Thousands of bugs are reported every week. How could you possibly stay on top of that situation? Well, you cannot. At least not without tool support. A first step is to connect bug reports that describe the same issue. In this paper, we show how the state-of-the-art search engine library can be used for duplicate detection – a fast and scalable solution for large projects.

This paper reports results from a MSc. thesis project by Jens Johansson. The project started with the goal to improve bug duplicate detection by going beyond textual features. Our idea was to represent bugs with all other available metadata in bug trackers, e.g., severity, dates, and submitter location. We decided to run experiments on bugs from the Android development project – a very large project with a public issue tracker. I really like this paper, and I honestly think it deserves more citations…

A conceptual replication with Apache Lucene

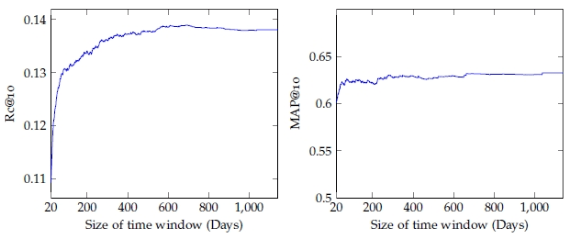

Adding more bug features didn’t make much of a difference, at least we found no major improvements during the short project. It didn’t even help to filter bug reports by submission data, keeping all bugs in the index gave the best results. In the end, we decided to primarily compare our results to a previous paper on bug duplicate detection by conducting a conceptual replication – we tried another tool (Apache Lucene instead of a classic VSM implementation) on another dataset (Android instead of bugs from Sony Mobile). In total we study 20,175 bug reports in the Android dataset – the same data provided for the MSR’12 mining challenge. As in the original Sony Mobile study, we investigate how weighting the textual content of the title and description differently when indexing bugs impacts the results.

Our findings are threefold: 1) we show IR can be useful to find bug duplicates in practice. In the original 40% of the duplicates were found, in our replication about 15% were identified among the top-10 recommendations. 2) We get the best results when weighting the title as three times more important than the description, confirming previous claims that the title should be up-weighted. 3) We show that previous evaluation setups might be too simple – bug reports often appear in clusters (also discussed in our previous study) and it is not at all simple to tell which one is the “master report”. An experimental evaluation needs to consider all duplicate relations, not just from one single master.

The main contribution of the paper is that we show how Apache Lucene, a state-of-the-art open source search engine library, can be use out-of-the-box to find duplicated bug reports. It’s really such a simple idea – full text indexing of bug descriptions and using incoming text from incoming bugs as queries. Unfortunately, many bug trackers used in industry offer awful findability. Investing in a standard search solution can help a lot!

Implications for Research

- Apache Lucene offers a scalable out-of-the-box solution to find textual similarities – well worth a try before implementing your own IR techniques.

- Up-weight the text in the title when detecting bug duplicates – for Android it is thrice as important as the full description.

- Bug relations might be more complicated than one master to many duplicates – a tool evaluation should consider all the many-to-many relations.

Implications for Practice

- Make sure the full text of bug reports is indexed in the bug tracker – it helps developers find related bugs quickly.

- Apache Lucene is an open source library that can easily be integrated in existing tools.

Markus Borg, Per Runeson, Jens Johansson, and Mika Mäntylä. In Proc. of the 8th ACM/IEEE International Symposium on Empirical Software Engineering and Measurement, Article No. 8, Torino, Italy, 2014. (link, preprint)

Abstract

Context: Duplicate detection is a fundamental part of issue management. Systems able to predict whether a new defect report will be closed as a duplicate, may decrease costs by limiting rework and collecting related pieces of information. Goal: Our work explores using Apache Lucene for large-scale duplicate detection based on textual content. Also, we evaluate the previous claim that results are improved if the title is weighted as more important than the description. Method: We conduct a conceptual replication of a well-cited study conducted at Sony Ericsson, using Lucene for searching in the public Android defect repository. In line with the original study, we explore how varying the weighting of the title and the description affects the accuracy. Results: We show that Lucene obtains the best results when the defect report title is weighted three times higher than the description, a bigger difference than has been previously acknowledged. Conclusions: Our work shows the potential of using Lucene as a scalable solution for duplicate detection.